The Future of Data Lake Analytics: Our Investment in Upsolver

We are delighted to announce our investment in the Series B for Upsolver, the no-code data lake engineering platform for agile cloud analytics. Upsolver’s value proposition can be stated succinctly: they give their customers the benefits of both data warehouses and data lakes. By making it easy for query engines to interrogate curated data in the data lake, Upsolver delivers the ease of use of data warehouses without a data lake’s need for specialized data engineering knowledge to use and administer.

Understanding just how big a deal this is requires walking through the evolution of data warehouses and data lakes — and the unsolved problems created by each step in that development.

The Imperfect Past

To understand data lakes, data warehouses, and their differences, we need to go back to the first data lake, Hadoop Distributed File System (HDFS). Hadoop, the first widely used distributed data processing platform, was preceded and inspired by Google’s MapReduce research paper. Users put everything they had in HDFS expecting insights, but instead found a mountain of maintenance operations and a lot of technical effort to extract insights. AWS reduced some of that burden by providing a cloud service with EMR (Elastic MapReduce) but it was still too complex.

What held everyone back was that for all of these data lakes, you had to write code to ingest, transform, and structure data the right way in order to query it for insights. And not normal code, but distributed systems code known only to a few data engineers who were often over-subscribed and disconnected from the operational teams. This left out an army of analysts and administrators staffed in companies all over the world. Google saw this shortcoming and evolved MapReduce into Dremel, the precursor to BigQuery. Dremel advanced two key concepts: it was SQL-only and cloud-native.

To be cloud-native meant rethinking the architecture, which led to separating the system into two independent systems: a storage engine and a query engine. Today, we call this the separation of compute and storage. It translates into cost savings because you can independently right-size resources to fit your storage needs and your query needs. But more importantly, it results in better scalability and elasticity. You can throw lots of compute at these queries and get results back in seconds. Suddenly, you have an efficient query machine that analysts and DBAs can use.

The combination of cloud-native and SQL-only gave birth to cloud data warehouses (CDWs) that were easy to use and scaled well, but introduced a new trade-off. The underlying data was now locked in a proprietary vendor format inside the data warehouse. Use cases like machine learning that needed access to that data had to get it elsewhere, often at the cost of having to manage the complexity of decoupled compute and storage. The result has been that everyone is now using both data lake and data warehouse architectures. The data lake remained as a raw data repository that fed purpose-built systems such as cloud data warehouses, ML platforms, log analytics stores, and non-relational databases.

Upsolver made this trade-off unnecessary by abstracting away the complexity of separated storage and compute. Users can put their data lake and warehouse in the same place and govern them centrally. This is how many of the big tech companies like Google and Facebook operate today. They have a query engine for analysts and DBAs that operates through SQL and a storage engine that centally maintains all the data and makes it available to data engineers.

Data Innovation for the Rest of Us

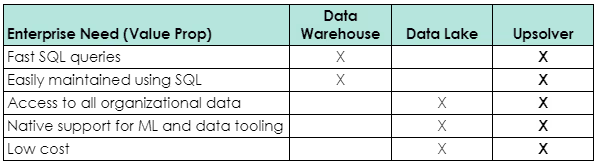

Upsolver has created a file system that is so well-optimized that it delivers CDW performance without any manual tuning and at a substantially lower cost. You can see just how much of a leap forward Upsolver represents in the following features table:

Upsolver does two very difficult things really well:

- Provide a no-code UI with drag-drop-click actions that generate SQL code to transform raw data into queryable structured data in the cloud data lake.

- Handle all of the background data engineering processes on those files automatically to make queries fast.

Many don’t realize how hard the second part is. Amazon maintains a list of all that you should do to your storage in order to make their query engine (Athena) work well. It’s a lot of work by a lot of people that could be focused elsewhere.

One Upsolver customer explained how coding one of these steps (compaction) for just one of his datasets was so much work such that he refused to do it for the other 47 datasets. Even if he completed this crusade, which he called “insane”, he’d still have only completed 1 of the 10 optimizations that were required. There was still compression, encryption, schema migration, partitioning, columnar optimization, query tuning, and on and on.

Upsolver customers describe this new architecture using words like “incredible” and “this is so awesome!” They called Apache Spark the “build” option, requiring a lot of work and technical depth; and Upsolver the “buy” option that worked out of the box. You can see this enthusiasm made real in how frequently Upsolver customers expand to more use cases as well as their remarkably low churn rate.

New Capabilities for a New Era of Analytics

If you take a step back and look at what has happened over the last decade it starts to look familiar. Years ago, the world settled on SQL for app databases and created RDBMS (relational database management system) to make all the complexity in managing transactional data go away and allow analysts and admins to operate them with ease.

The world of big data analytics is headed in the same direction. It has taken a decade or two to build the cloud technology foundation that allows for infinite scale and fast performance. Upsolver represents the fully automated, zero-trade-off big data RDBMS that analysts and admins have always dreamed of. As we step into the era of machine learning and data science, it couldn’t have come a moment too soon.

We are thrilled to join Upsolver’s journey in unlocking enterprise data and simplifying data management.

Eric Anderson contributed to our investment in Upsolver.

News from the Scale portfolio and firm