MCP is the new WWW

Over the past year, agentic AI has been loosely defined to mean anything from RAG to function calling and computer use. But what is an agent exactly? We now have an answer: agent = LLM + MCP.

Primer

For LLMs, MCP serves the same goal that web browsers accomplished for humans 30 years ago: it provides a standard mechanism to discover and access resources across the internet. In a world where humans consume websites, AI models will consume MCP.

Although LLMs could already interact with the world through tools like search, each new capability required (1) precisely defining to the LLM how to invoke it, and (2) implementing custom middleware between the LLM, the tool, and the user. With MCP, a list of providers (aka servers) is given to the application (aka the client). A unified format enables the discovery of capabilities, and a standard control loop enables input, output, and interfacing between model and external tooling.

Anthropic introduced MCP in November, and adoption is already widespread. The protocol has been embraced by all major players in the AI ecosystem, from OpenAI to Google and Microsoft. A specification for an authorization flow was introduced in March, and since then SaaS vendors like Stripe have started to publish servers.

Client-side: consuming MCP

MCP clients are everywhere:

- Chat apps: The first MCP client was Claude Desktop, with everyone else following suit.

- IDEs: Coding agents can benefit from access to every developer productivity and infrastructure tool, so it’s no surprise that IDEs have been early adopters of MCP.

- Agent frameworks: Adding integrations to agent frameworks via function calling has been a slow grind relying heavily on community effort. Instead, they can now simply plug into the MCP server ecosystem.

- Workflow orchestrators: Besides expanding tool availability, MCP has made it possible to add agentic “supernodes” to the deterministic pipelines of workflow orchestrators. This is a departure from the pre-MCP world where AI access was just a step in a prescribed flow.

The last client to mention is (surprise): YOUR PRODUCT! OpenAI just added support for MCP in their API, effectively turning the chat completions endpoint itself into an MCP client. Now a single parameter change is the only thing standing between your application and the entire universe of MCP servers… mostly.

The main issue is that there are well-recognized security gaps. Here are some examples:

- The agent might go to the wrong tool and exfiltrate data in its query. This could happen due to mislabeling from a malicious actor, or due to confusion between friendly but similarly-named servers and/or tools.

- MCP clients are given instructions by the servers on how to use their tools. These are sent to the LLM in the context. That mechanism can be leveraged by attackers to take undesirable actions with the other tools available to the agent.

- Even if an organization carefully vets what servers an agent can access, the server can freely change the tools and instructions it hosts at any time: the safety guarantee is ephemeral.

Another limitation is that it’s unclear how to find MCP servers, predictively register them with the client, set up authentication, etc. In essence, we’re still missing a “Google” for this new WWW. Fortunately, some effort is already under way.

We think that sophisticated organizations will end up in more centralized setups where a single entry point can enforce policy and provide discoverability. One solution to the security problem, which also addresses the discovery question raised earlier, is “app stores” of MCP servers.

Server-side: producing MCP

In the future, an important consumer of your product will be the AI agents we just talked about. These agents will not exist in a chatbot inside your website or app, but rather in a unified interface that can query every product your customer wants to leverage. A single pane of glass (like ChatGPT) is not only a better user experience than a myriad of standalone chatbots, but it also increases the complexity of tasks that can be accomplished. The recommendation we emit is, therefore, to join this ecosystem. Agents will not need a frontend, they’ll want MCP. So the most important MCP server for your business is: YOUR OWN!

It’s an attainable goal. For starters, you’ve probably been hearing (for years) about the need to (a) transition to a headless content management system and (b) expose a fully-functional public API. If you’ve already done those things, you’re well on your way. For the final step, there are a few challenges to tackle:

- MCP is an API. Don’t forget to implement auth (every MCP server is an OAuth server!), quotas and rate limiting, and all the other things you have to worry about with APIs (plus more because it’s a stateful protocol).

- But MCP is also not an API. If the agent is told to integrate 10 different SaaS vendors, each of which has 20 separate API endpoints, it won’t know what to do. Naïvely converting an API spec to an MCP server will make it unusable.

- The idea of complete standardization sounds great in theory, but in practice it’s still the wild west of MCP clients. If you ever had to make a website work for Internet Explorer, you are familiar with this pain. Things will get better, but just like browser compatibility, it likely won’t ever be fully solved.

Fortunately, we believe a new generation of “picks and shovels” providers is emerging to help fill these gaps.

The ecosystem

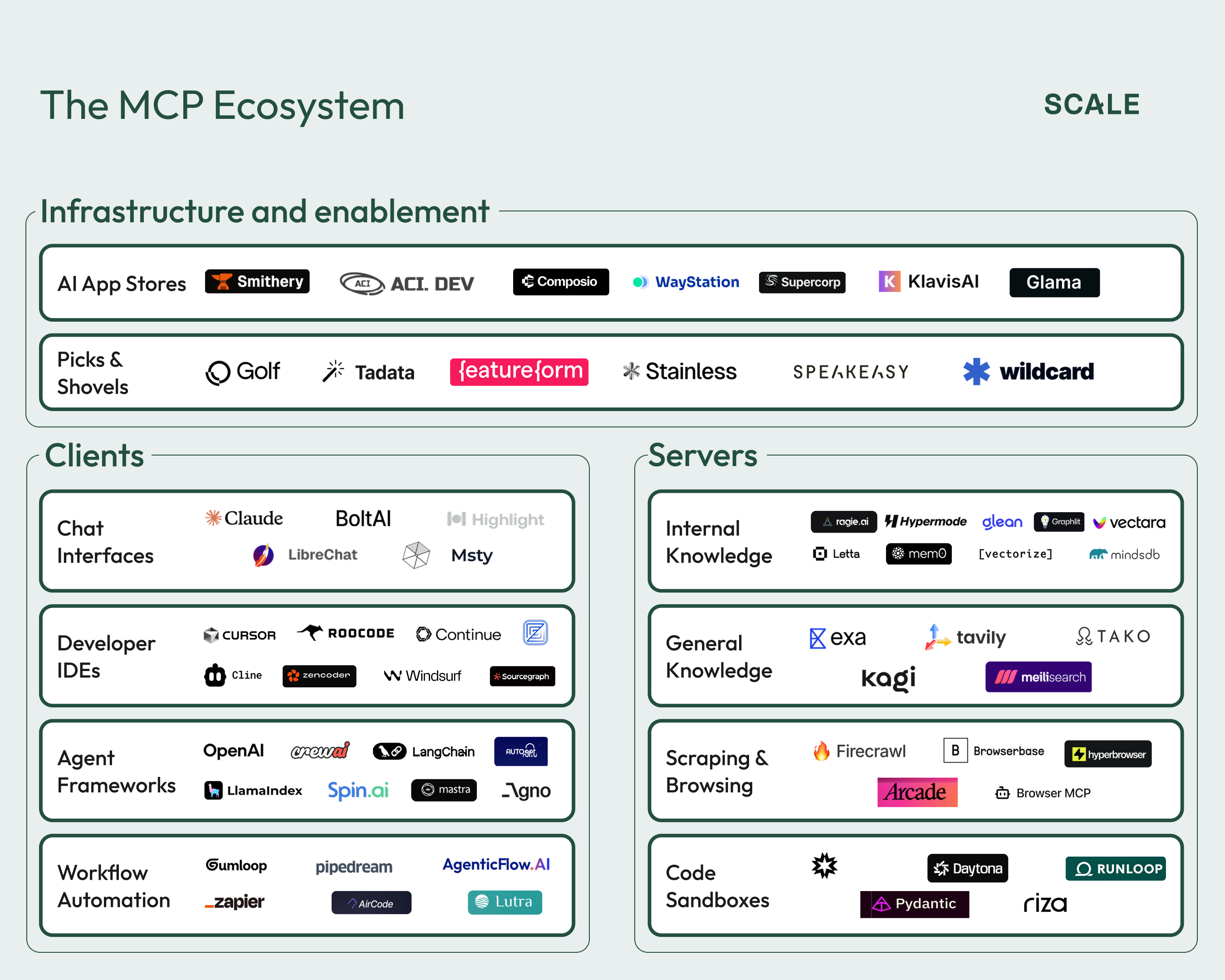

The MCP ecosystem is already very rich, especially given how new the technology is:

Get ready

MCP is here to stay, and it’s unlocking agentic AI. We are extremely excited about the ecosystem of companies leveraging MCP in their products and enabling access to MCP for others. If you’re a builder in the space, reach out to javier@scalevp.com!

News from the Scale portfolio and firm