Great writing is a 10x tool with a powerful higher calling.

Warren Buffett’s annual letters (which he drafts with his sisters’ names in place of “Dear Shareholder”) are mythologized in the business world for the clarity with which he describes his strategy. Jeff Bezos publishes a similar annual letter at Amazon, and the company requires executives to draft a six-page memo where peers might use PowerPoint. The narrative structure required by great writing benefits both the author and the audience, and it’s a profoundly human skill, in that great writing has a rhythm that deviates from the expected.

But this higher calling is a fraction of the business writing domain. Produced in much higher volumes are marketing copy, emails, performance reviews, code documentation… the list goes on. In the business domain, most of the word count is a nest of predictable niceties wrapped around the key point(s).

Writing this content is a desk worker’s manual labor, demanding little skill but lots of time. (The quality of Gmail autocomplete, introduced in 2018, illustrates just how repetitive business writing is.) It’s this attractive target that makes Natural Language Generation (“NLG”) products so exciting, because this new technology has finally grokked the patterns interwoven in our prose.

NLG products are newly feasible, enabled by “transformer” language models like GPT-3 from OpenAI and Jurassic-1 from AI21 Labs. These models are trained on enormous repositories of text drawn from the internet. During training, text is broken up into “tokens,” which largely correspond to words or pieces of words (think of them as the Lego bricks that words are built from). When a user prompts a model to write, it evaluates the text that comes before the cursor and calculates probabilistically which token best fits next, then repeats the process token after token.
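To make the predict-the-next-token loop concrete, here is a toy sketch in Python. It is emphatically not a transformer: it is a simple bigram counter that only looks at the single previous word, and it splits on whitespace rather than using real subword tokens. The corpus and all function names are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def tokenize(text):
    """Split text into lowercase word tokens (real models use subword tokens)."""
    return text.lower().split()


def train_bigram(corpus):
    """Count how often each token follows each other token in the corpus."""
    counts = defaultdict(Counter)
    tokens = tokenize(corpus)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Turn the raw follow-counts for `prev` into a probability distribution."""
    followers = counts.get(prev, {})
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()} if total else {}


def generate(counts, prompt, max_new_tokens=5, seed=0):
    """Repeatedly sample a next token given the text so far."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    tokens = tokenize(prompt)
    if not tokens:
        return ""
    for _ in range(max_new_tokens):
        probs = next_token_probs(counts, tokens[-1])
        if not probs:  # nothing ever followed this token in training
            break
        choices, weights = zip(*probs.items())
        tokens.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(tokens)


# A tiny, invented "training set" of business-email niceties.
corpus = (
    "thank you for your email . thank you for your time . "
    "thank you for the update ."
)
model = train_bigram(corpus)
print(next_token_probs(model, "for"))  # "your" is twice as likely as "the"
print(generate(model, "thank you"))
```

Even this crude model picks up that “thank you” is followed by “for.” Large language models do the same kind of thing with far more context and far more text, which is why predictable business prose is such a natural fit.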

When GPT-3 was first released in May 2020, the technology’s promise seized the minds of hackers. Two years later, the demand from the broader population for NLG products is resounding.

Marketing copywriters have been the quickest to adopt NLG products.

It’s a highly predictable genre, and one in which the tradeoff between volume of content and thoughtfulness of content leans most toward volume. (Think of the typical “Top 10 Tips” SEO blog post.) Existing language models can do this job out of the box, and they perform especially well with a little “prompt engineering.” Early winners in this market, like Jasper.ai, pair the underlying model with an inviting user experience, tools for prompting, and strong onboarding.

Increasingly, NLG is being built into broader systems of engagement as a hook or differentiator, as end-user adoption of the technology expands from early adopters to the early majority. For example: while copywriting tools best serve NLG’s early adopters, content marketplaces like Pepper and ContentFly are bringing NLG to the early majority by providing Jasper-like features to already active users of a broader suite. (These two companies are SaaS-enabled marketplaces for buying copywriting services and conducting the draft/review/feedback cycle; each has begun to offer its own GPT-3-based NLG product baked into the software that accompanies the marketplace.) Here, the value of the NLG product is synergistic with the value of the broader marketplace and SaaS product suite.

I expect that NLG tools for new use cases will integrate ever more deeply with the relevant systems of engagement to serve broadening adoption, and will better serve demands for (1) adherence to a genre and (2) programmatic personalization. By genre, I mean the structure that pops into your mind if I say “cold sales email” or “legal motion” or “employee performance review.” Integrating with the system of engagement means bringing NLG tooling to the place where the buyer currently does (or can do) most or all of their task. Personalization is the weaving of an intended recipient’s identity into the composition.

What’s a next step from marketing copy? It might be email writing, where a few cool products are emerging.

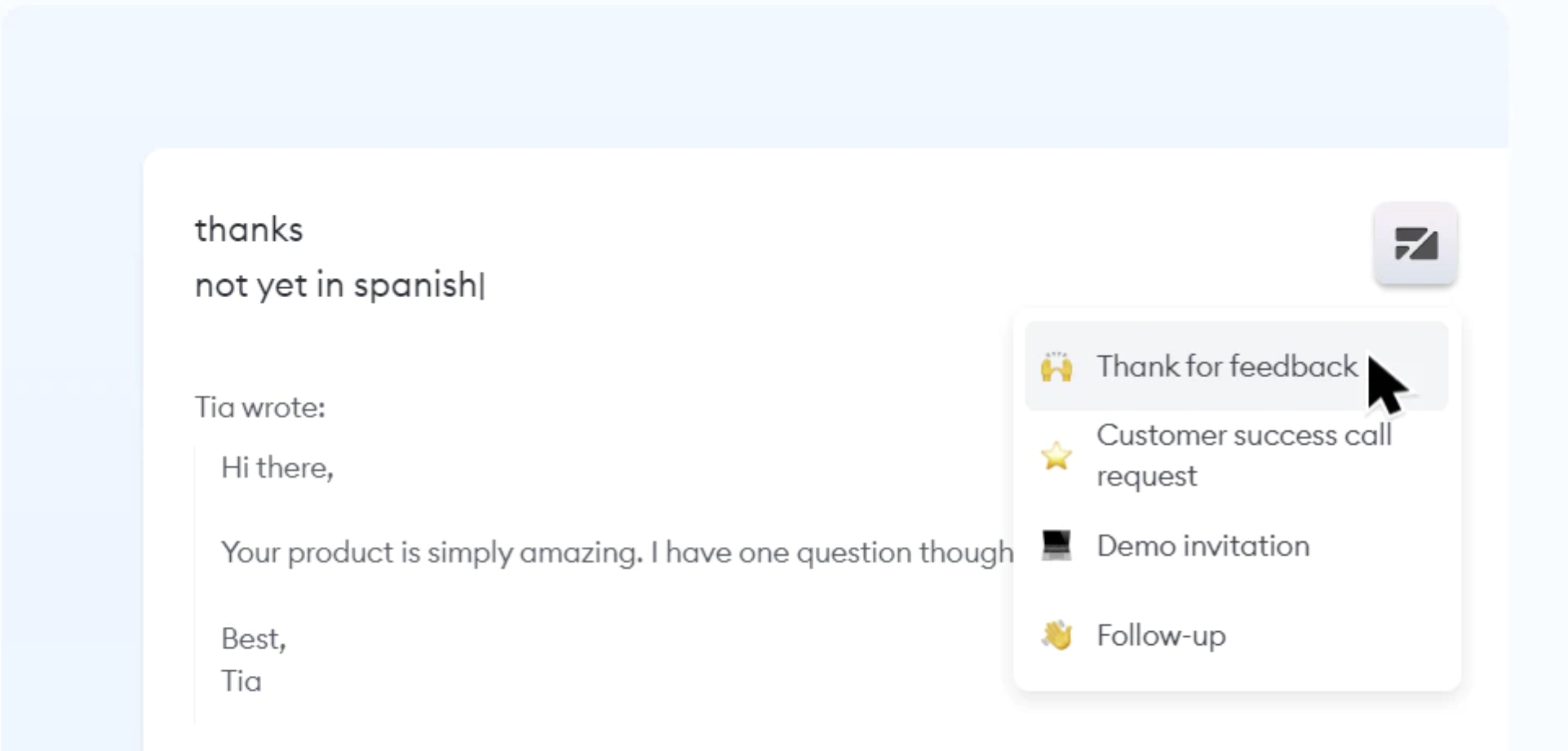

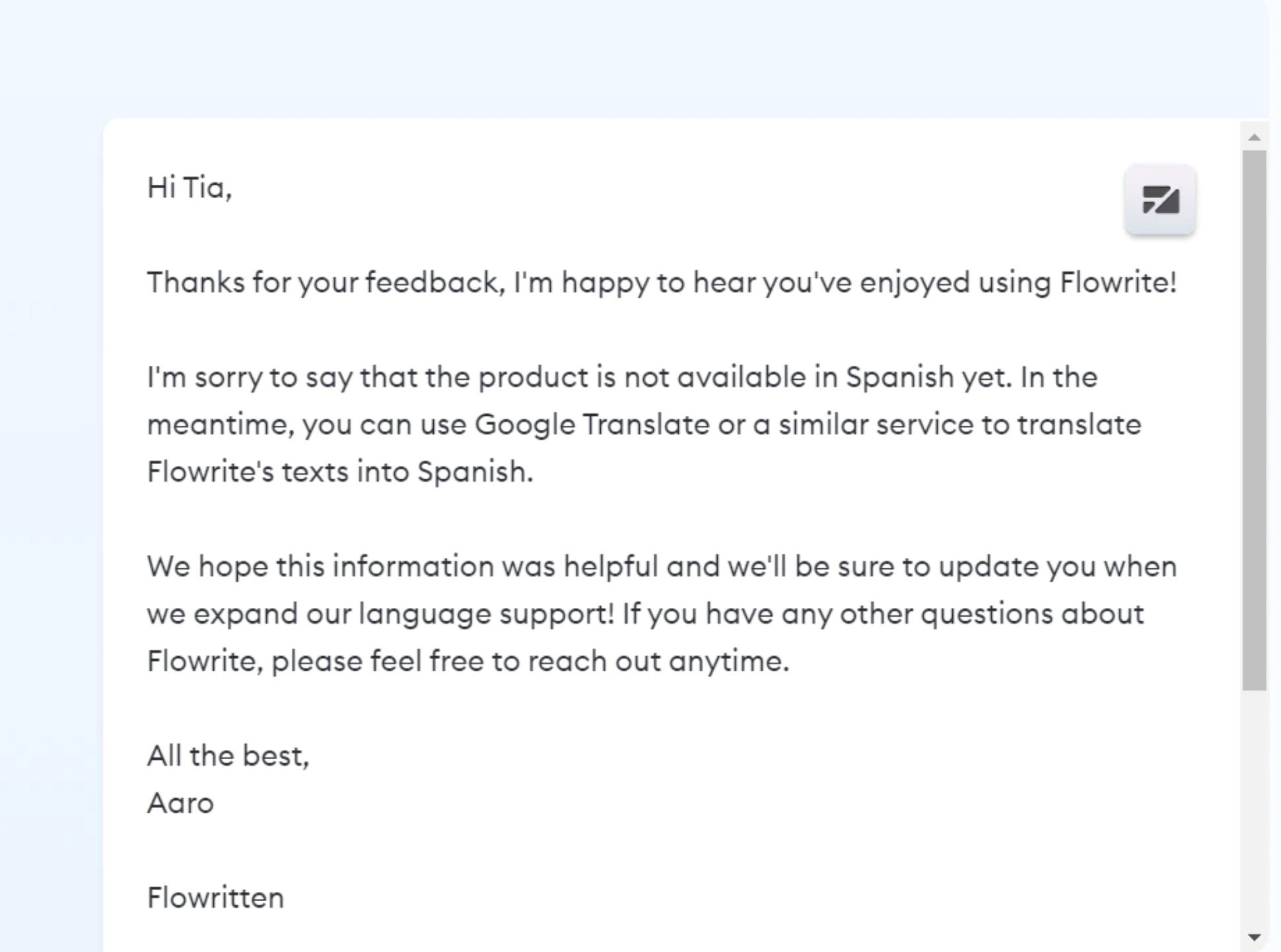

One example is Flowrite, a Chrome plugin for “supercharging daily communication,” which lets the user select a genre and tap out a few key points that the product turns into a composed email with all the right niceties and nesting. These genre selections, a sort of productization of the priming process, are available in a pre-stocked gallery that can be further enriched by the user. The personalization element is handled here through the few commands a user inputs, like “Interview next tue at 4p via Zoom?”
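For a feel of what “productizing the priming process” could look like under the hood, here is a minimal Python sketch. It is a guess at the general pattern, not Flowrite’s actual implementation: the gallery contents, the `build_prompt` and `add_genre` helpers, and the prompt wording are all hypothetical. The resulting string is what would be handed to a language model.

```python
# Hypothetical genre gallery: each entry primes the model for one kind of email.
GENRE_PRIMERS = {
    "interview_invite": (
        "Write a warm, professional email inviting a candidate to an interview."
    ),
    "meeting_followup": "Write a brief, friendly follow-up email after a meeting.",
}


def add_genre(name, primer, primers=GENRE_PRIMERS):
    """Let the user enrich the pre-stocked gallery with their own genre."""
    primers[name] = primer


def build_prompt(genre, key_points, primers=GENRE_PRIMERS):
    """Combine a genre primer with the user's terse key points."""
    if genre not in primers:
        raise KeyError(f"Unknown genre: {genre!r}")
    bullets = "\n".join(f"- {p.strip()}" for p in key_points if p.strip())
    return f"{primers[genre]}\n\nKey points to cover:\n{bullets}\n\nEmail:\n"


prompt = build_prompt("interview_invite", ["Interview next tue at 4p via Zoom?"])
print(prompt)
```

The user only types the terse key point; the genre primer quietly supplies the instructions about tone and structure.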

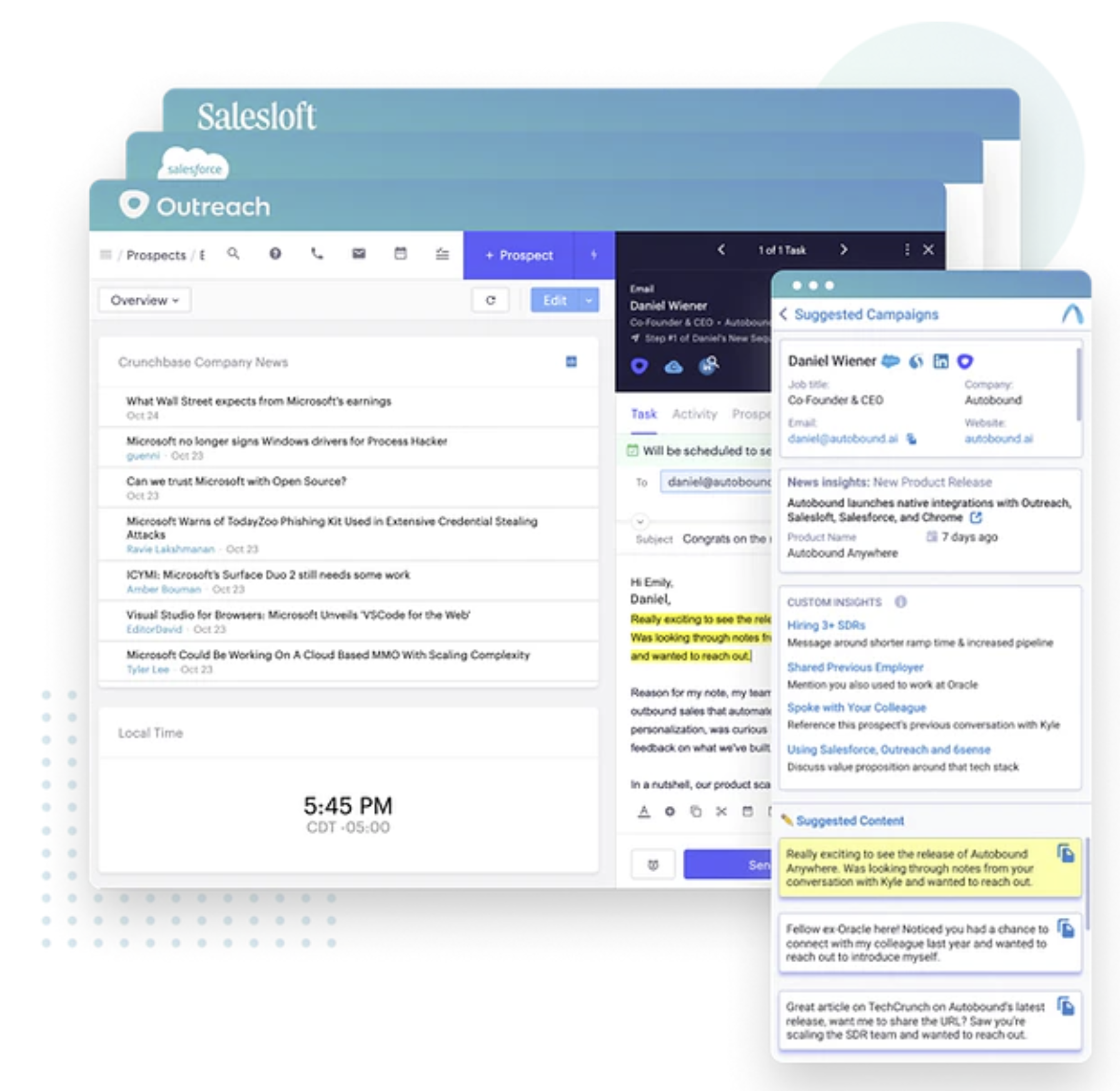

Autobound is another startup, this one focused entirely on sales emails, so its problem domain is personalization at scale (the genre is fixed). The product pulls company news and prospect biographical details from third-party sources and feeds these, along with CRM data, into the underlying NLG model. Various cold email drafts are output and provided to the salesperson in a sidebar within their sales system of engagement (e.g. Salesloft or Outreach).
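Personalization at scale is, at its simplest, a join between prospect records and outside data, repeated for every prospect. The Python sketch below shows that shape under loud assumptions: the `Prospect` fields, the `build_sales_prompts` helper, the prompt wording, and the sample data are all invented for illustration and say nothing about how Autobound actually works.

```python
from dataclasses import dataclass


@dataclass
class Prospect:
    """A hypothetical CRM record for one sales prospect."""
    name: str
    title: str
    company: str


def build_sales_prompts(prospects, company_news, n_drafts=2):
    """Build n_drafts model prompts per prospect, merging CRM data with news."""
    prompts = {}
    for p in prospects:
        news = company_news.get(p.company, [])
        news_lines = "\n".join(f"- {item}" for item in news)
        if not news_lines:
            news_lines = "- (no recent news found)"
        base = (
            "Write a short cold sales email.\n"
            f"Recipient: {p.name}, {p.title} at {p.company}\n"
            f"Recent company news:\n{news_lines}\n"
        )
        # One prompt per requested draft, so the model returns several options.
        prompts[p.name] = [f"{base}Draft variant {i + 1}:\n" for i in range(n_drafts)]
    return prompts


# Invented sample data standing in for CRM and third-party sources.
crm = [
    Prospect("Dana Example", "VP Sales", "Acme Corp"),
    Prospect("Lee Sample", "Head of Ops", "Beta Corp"),
]
news = {"Acme Corp": ["Opened a new office in Austin"]}

sales_prompts = build_sales_prompts(crm, news)
print(sales_prompts["Dana Example"][0])
```

The genre line never changes; only the recipient and news lines do, which is what makes the drafts feel personal while the process stays fully programmatic.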

As product builders move into increasingly specialized domains, they will need “fine tuning” (customizing off-the-shelf models with additional text repositories) to get deep into each domain’s genre and lexicon. But with such training, it’s easy to imagine really cool use cases. Maybe a tool for writing legal motions that are quotidian but not so template-driven as to be fill-in-the-blank. Perhaps a product that takes as input…

- Motion to Seal

- Rationale is that the document in question contains trade secrets

- The document in question was shared with the counterparty under NDA

- Cite Acme Corp vs Beta Corp, 62 F. Supp. 2d 463 (D.P.R. 1999)

…and produces a submittable motion with certain portions highlighted for human proofing.

Equally cool as a next step is leveraging these models’ capacity to summarize, where existing text is itself the input. One example that grabs me is Stenography, a tool that reads programmer-written code and generates accompanying plain-English commentary. Viable is another example; the product transcribes and then summarizes user feedback in a voice tailored to C-suite, product, or CX/UX teams.

Natural language generation is a particularly cool “wow” example of the way that software is increasingly doing work (with a human in the loop) where it once just served as the place to do that work. It’s easy to imagine many of the biggest tech exits a few years from now baking language models into a broader problem-solving software product to make their users even more efficient and productive.

Originally posted on Friday Meeting Scratchpad, Max Abram’s Substack.