Where’s my AI banker?

If you’re wondering why AI hasn’t radically transformed financial services yet, you’re not alone. At a recent dinner with leaders from top banks and cutting-edge fintechs, it was clear that enthusiasm for and investment in AI are sky-high, but the gap between promise and real-world progress remains wide.

Financial services is one of the most data-rich, process-heavy industries, with armies of humans waiting to be replaced by AI. Yet real adoption lags. Why? Everyone blames big banks for being bad at buying tech or claims they just have long sales cycles. The reality is much more nuanced.

This industry contains some of the most sophisticated institutions when it comes to pre-LLM AI. For decades, banks and fintechs have been building and refining advanced machine learning models across a wide range of functions. These systems are mature, deeply embedded, and in many cases, already highly performant. As a result, the bar for LLMs to show a step-function improvement is uniquely high here, unlike in industries such as legal, where LLMs took AI adoption from 0 to 1.

Banks don’t just need working models. They need deterministic, explainable systems that never hallucinate, operate within complex regulatory constraints, and integrate with sprawling legacy infrastructure. Data quality remains a silent killer, risk tolerance is uniquely low, and trust in machines hasn’t caught up to trust in fallible humans.

Yet despite the roadblocks, there’s real momentum and reason for optimism. Startups are steadily chipping away at tangible, high-impact use cases. The pace of model improvements, combined with a surge of entrepreneurial energy across every layer of the financial stack, has many in the industry excited. Your AI banker isn’t here yet, but it’s undeniably on its way. First, though, there are barriers startups need to overcome.

The culture + compliance trap

AI has a perception problem. Everyone expects humans to be flawed but machines to be flawless. When Waymo crashes once in a million miles, it’s a scandal. When humans crash thousands of times a day, it’s business as usual.

This double standard is especially tough in financial services, where errors are deemed just as unacceptable as they are on the road. In a compliance-heavy industry, hallucinations and mistakes have regulatory consequences. Regulators are far more comfortable with a person making a mistake than a machine. A human can be reprimanded, retrained, or replaced. But if an AI system makes the same error, the accountability is murky, and the regulatory risk skyrockets.

This higher bar creates a chilling effect. If an AI-powered system can’t guarantee perfect performance, will it ever see daylight? The result is often fewer deployments, fewer data points, and slower organizational learning.

What’s the way forward? There’s no clear playbook yet. Some are testing human-in-the-loop approaches, letting AI handle high-volume, low-risk tasks (like triage, summarization, or first-pass decisions), with humans providing oversight, final approvals, and handling the more nuanced cases. Others are exploring frameworks that make AI output more explainable, reproducible, and auditable by design.
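To make the human-in-the-loop idea concrete, here is a minimal sketch of how such routing could look. Everything in it is an assumption for illustration: the task names, the 0.90 confidence threshold, and the `ModelOutput`, `route`, and `audit_record` names are hypothetical, not a description of any bank’s or vendor’s system.

```python
from dataclasses import dataclass

# Hypothetical values, chosen only for illustration.
LOW_RISK_TASKS = {"triage", "summarization", "first_pass_decision"}
CONFIDENCE_THRESHOLD = 0.90


@dataclass
class ModelOutput:
    task: str          # kind of work the model performed
    confidence: float  # model-reported confidence in [0, 1]
    result: str        # the draft output


def route(output: ModelOutput) -> str:
    """Return "auto" for low-risk, high-confidence work,
    and "human_review" for everything else."""
    if output.task not in LOW_RISK_TASKS:
        return "human_review"  # nuanced or high-risk work always goes to a person
    if output.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # low confidence also goes to a person
    return "auto"


def audit_record(output: ModelOutput) -> dict:
    """Record the decision and the rule inputs so it can be reproduced later."""
    return {
        "task": output.task,
        "confidence": output.confidence,
        "threshold": CONFIDENCE_THRESHOLD,
        "route": route(output),
    }
```

The routing rule is deliberately plain and deterministic: the model’s output may be probabilistic, but the decision about who signs off on it is easy to explain and audit.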

Ultimately, the psychological bar for AI needs to come down, but the systems also need to be built not just for regulators, but for humans. It’s not enough for a model to work. It has to feel trustworthy. Imagine a loan officer telling a customer, “Sorry, your application was rejected and I can’t explain why.” Or a manager justifying a failed transaction for a high-net-worth client because the algorithm hallucinated. Those moments create organizational discomfort and brand risk that no amount of technical whitepapers can smooth over.

Until companies design AI that’s not only compliant, but explainable, intuitive, and culturally acceptable, much of the innovation will remain stuck in pilots.

Garbage in, garbage out

Banks are sitting on decades of unstructured, inconsistent data trapped in archaic architecture. Legacy systems weren’t designed for AI-scale workflows, and the result is a mountain of effort required before even getting to deployment.

Many institutions only realize the true state of their data when they try to implement an AI solution and it starts spewing garbage. The glossy demo works beautifully in a sandbox. But in production? The model runs into incomplete records, legacy formats, conflicting systems, and mislabeled inputs.
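One way to find these problems before a pilot rather than during it is a quick audit of the inputs. The sketch below is illustrative only: the field names, the ISO date format, and the `audit` function are assumptions, not a real bank schema or tool.

```python
from datetime import datetime

# Hypothetical schema for an account record.
REQUIRED_FIELDS = ("customer_id", "opened_on", "balance")


def audit(records: list[dict]) -> dict:
    """Count basic data-quality problems in a list of account records."""
    issues = {"incomplete": 0, "bad_date": 0, "conflicting": 0}
    balances_by_customer: dict[str, set] = {}

    for rec in records:
        # Incomplete record: a required field is missing or empty.
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            issues["incomplete"] += 1
            continue

        # Legacy format: the date is not in the expected YYYY-MM-DD form.
        try:
            datetime.strptime(rec["opened_on"], "%Y-%m-%d")
        except (ValueError, TypeError):
            issues["bad_date"] += 1

        balances_by_customer.setdefault(rec["customer_id"], set()).add(rec["balance"])

    # Conflicting systems: the same customer appears with different balances.
    issues["conflicting"] = sum(
        1 for balances in balances_by_customer.values() if len(balances) > 1
    )
    return issues
```

Running something like this over exports from each source system gives a rough count of how much cleanup stands between the sandbox demo and production.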

Years of accumulated tech debt, fragmented infrastructure, and unclean data suddenly become front and center. An AI compliance or underwriting solution doesn’t fix your data problems. It reveals them. If a model is trained on incomplete, conflicting, or outdated inputs, its reasoning becomes murky. And without a clean, connected foundation, even the best models will underperform and fall short of producing a clear audit trail.

This is a business problem long before it’s a regulatory one, although “pointing to a black box and shrugging” won’t cut it with regulators either.

This creates a double bind: the model must be both accurate and explainable, but poor data quality makes both nearly impossible. When a pilot is run on messy, fragmented data, the results are underwhelming, often not because the AI isn’t capable, but because the inputs are broken. Yet instead of recognizing a data problem, organizations often conclude the AI doesn’t work. Without a clear win, there’s no business case to invest in fixing the data, and without better data, the next pilot fails too. The cycle stalls before real progress can begin.

This failed loop has long-term consequences. AI systems improve through usage. But when pilots fail and models never make it to production, there’s no iteration, no refinement, and no learning. It creates a self-fulfilling cycle: bad data leads to poor performance, which kills the case for investment, ensuring the model never gets better. A feedback loop of failure.

Until institutions treat data quality as a prerequisite, even the most advanced AI systems will struggle to meet the bar for accuracy, explainability, and compliance. The flywheel for improvement never gets a chance.

Firing is hard; “not hiring” and “new things” are easier

Incumbent financial institutions have massive teams, established workflows, and deeply entrenched processes. Swapping out a 100-person team for 50 AI-powered humans isn’t just difficult; it may be politically and operationally unrealistic in the near term.

But doing more? That’s possible.

This is the “AI expansion” lens. Instead of pitching AI as a way to replace people, it’s easier to help incumbents scale what’s possible. AI can handle tasks these institutions could never justify staffing before. Want to give every client (not just the ultra-high-net-worth ones) a personal relationship manager? That’s impossible with humans, but maybe not with AI. Imagine that every time you call your bank, you get Joe. Joe knows your history, preferences, accounts, and goals. Joe never transfers you. Joe never gets sick.

For fast-growing companies, the equation looks different. It’s not about layoffs, but about not hiring in the first place. Teams that might have doubled headcount to keep up with demand are now using AI to avoid opening those new reqs at all. Instead of growing from 50 to 100 people, they’re investing in tooling to increase leverage per employee. While the end result may look the same as firing, it feels vastly different to these organizations. This is why high-growth banks and fintechs are often the early adopters.

For startups selling into this market, “help you avoid hiring” or “do something that isn’t possible with humans” is a much easier pitch than “replace your team.”

The ROI calculus has a hard threshold to clear

This industry didn’t just start using AI. It’s been using machine learning for over a decade at scale. JPMorgan invests $12B annually in tech! Sophisticated ML systems already power critical functions like fraud detection, credit scoring, transaction monitoring, and sanctions screening. These aren’t legacy workflows screaming to be replaced, but deeply embedded, high-performing engines that have been optimized over time.

In financial services, the question is whether AI can outperform systems that already work very well. That’s a drastically different starting point than other industries like legal, where pre-LLM AI adoption was near zero.

Then there is the question of LLM applicability in finance. Traditional ML is very good with numbers and tabular data, while LLMs are best suited to text. LLMs are also probabilistic. Finance, at the end of the day, is mostly numbers and incredibly deterministic.

Legal had almost no AI solutions before and is an incredibly text-rich industry, so LLMs were a natural fit. As a result, the adoption of AI solutions there has been massive over the last few years. In contrast, the bar is simply higher in the financial world. There is clearly going to be a role for LLMs here, especially given the rapid pace of progress in the underlying models and in startup innovation. But the ROI has to be more compelling.

Where the opportunity lies

Despite the hurdles, we remain bullish. Financial services represents over 7% of U.S. GDP, employs ~7 million people, and spends hundreds of billions annually on IT. It’s one of the largest, most foundational industries in the economy. And it’s ripe for change.

Payment rails are evolving. Consumer expectations are shifting. Fraudsters certainly aren’t waiting for regulatory approval or clean data; they’re adopting every AI tool immediately and iterating fast. Financial institutions and regulators need to make bold decisions. AI is no longer optional; it’s becoming a necessity to keep up.

The scope and the inefficiency are enormous. For startups finding success here, we’ve seen the following key vectors:

- It’s challenging to replace headcount at big, slow-growing incumbents. Selling to fast-growing companies that are eager to use AI to avoid hiring another 50 people is easier.

- Targeting areas with less historical tech investment, high manual volume, and relatively low risk. Financial services may be a pioneer in ML for underwriting and fraud, but that sophistication isn’t evenly distributed across every functional area. Look for the weak link in the historical solutions.

- AI is unlocking entirely new ways of serving customers, running operations, and managing risk. Unlike incremental additions to the stack, net-new solutions can be adopted rapidly.

- The budgets at the top global banks may be massive, but that’s both a good and a bad thing. These top organizations have a natural proclivity to build before buying. There are 5k regional & community banks and 5k credit unions that have a small fraction of that budget and rely on third-party vendors. That’s how Q2 and nCino originally went to market and built their core businesses.

- It’s also worth noting that some of the most compelling fintech innovation isn’t happening in the U.S. In markets like India and Brazil, government-led initiatives like UPI and PIX have driven massive adoption of real-time payments and open banking. These platforms are reshaping consumer behavior and enabling a wave of startup activity built on modern rails.

AI at work today

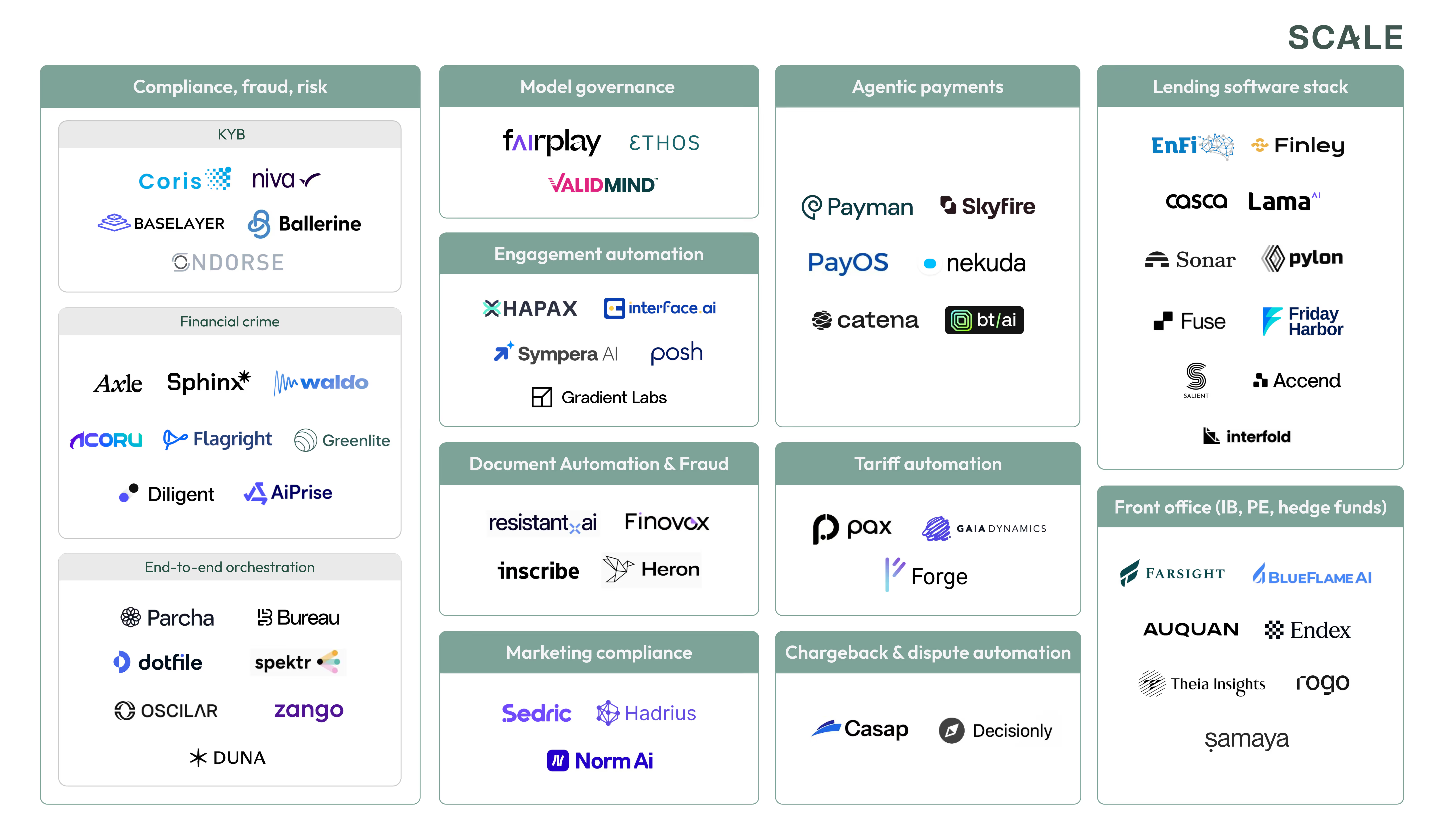

Despite the roadblocks, we’re seeing meaningful traction in very real, very messy parts of financial services. This isn’t meant to be exhaustive, but to illustrate that real AI applications are making a difference today, and that this is just the beginning.

We’ve been lucky to work with some of the best already

At Scale, we couldn’t be more energized by the pace and breadth of innovation we’re seeing in financial services. We’ve been fortunate to invest in and work with long-standing leaders like Bill.com, Forter, Socure, and Papaya Global. Over the last few years we’ve partnered with innovators in insurance underwriting (Sixfold) and AR automation (Monto). More recently we invested in Klarity AI, which is automating document-intensive finance and accounting workflows, and Abacum, which is giving modern finance teams the FP&A platform they’ve always dreamed of. The next wave of category-defining companies won’t just plug into legacy systems; they’ll reimagine how the entire stack operates with AI at the core.

If you’re building here, give us a shout!
