Stream processing becomes mainstream

Like counts, ranked feeds, and fraud alerts are examples of user-facing analytics: the result of complex transformations on vast amounts of raw data that capture user activity. Although user-facing analytics are increasingly present across modern applications, most companies still struggle to deliver them in real time and at scale. As a result, many businesses miss the chance to deliver a personalized user experience and to react quickly to change.

Event streaming platforms like Kafka, Pulsar and Kinesis can already capture raw real-time data at scale. They have gained widespread adoption in the context of microservices, whose proliferation has made point-to-point communication between systems inefficient. Using event streams to centralize data exchange, while important, only scratches the surface of the value that they can provide.

We believe that we are at an inflection point where stream processing will become the standard that powers user-facing analytics. The growing importance of the use cases enabled by stream processing is being met with solutions that are increasingly easy to adopt. As investors in this space, this is a trend we’re following closely.

A new architecture for user-facing analytics

Traditionally, companies wanting to deliver user-facing analytics have had to compromise by choosing an imperfect architecture. Here are some common examples:

- The application can query the OLTP database (e.g., PostgreSQL). → Problem: The OLTP database is optimized for reading and writing a few records at a time, so analytical queries that touch many rows do not scale.

- The application can query the data warehouse (e.g., Snowflake). → Problem: The query takes too long to run when dealing with vast amounts of data (high latency).

- A job (e.g., Spark) can periodically pre-compute values and reverse-ETL the results to a NoSQL database (e.g., Cassandra) for serving. → Problem: Reading from the database is fast, but due to batching the data seen by the user is stale.

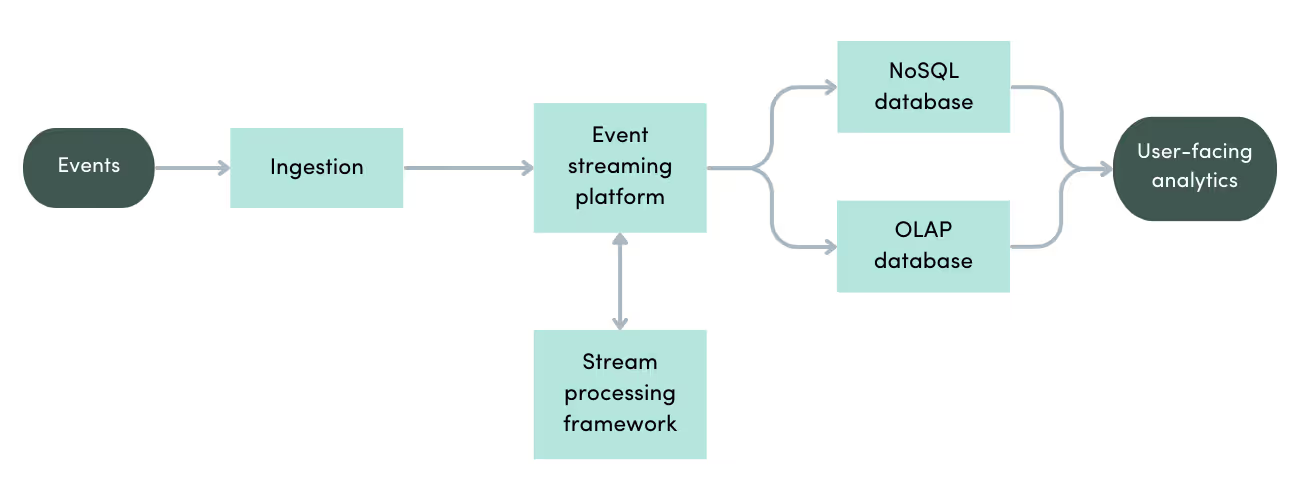

Although no two organizations are the same, we have observed that companies who deliver best-in-class user-facing analytics have generally adopted an architecture that resembles the following:

Let’s go through these components one by one:

Ingestion: This layer is responsible for detecting the state changes associated with events and producing them to the event streaming platform. Developers can build ingestion into their application by using low-level APIs or high-level, configurable tools (e.g., event trackers, CDC systems).

Event streaming platform: This layer provides a highly-scalable, fault-tolerant system that ingests multiple event streams, stores them, and enables other systems to asynchronously consume them. As mentioned earlier, this is a mature segment with established players like Kafka, Pulsar, Redpanda and Kinesis.

Stream processing framework: This layer consumes raw events from the event streaming platform, performs analytics, and produces usable events back to the platform. Stream processing is the focus of this blog post.

NoSQL database: This layer enables serving. A common workflow is to compute metrics in the stream processing framework and then perform upserts on a table in the NoSQL database as the events are produced to a stream. This is also a mature segment with big players like MongoDB, DynamoDB and Cassandra.

OLAP database: Raw events can be streamed directly into an OLAP database, which is designed to run sub-second aggregations on vast amounts of data. This layer can therefore complement stream processing by enabling use cases where the metrics required by the application are not known in advance and it is better to compute them on demand than to maintain them continuously. In many cases, it can also replace the NoSQL database to act as the serving layer. This is a growing segment with powerhouses like Pinot, Druid and ClickHouse.
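To make the difference between the two serving paths concrete, here is a minimal sketch in plain Python. It is an illustration under our own assumptions, not how any of the products above are implemented: `ServingTable` is a hypothetical stand-in for a NoSQL table, the event fields are made up, and a list plays the role of the event stream.

```python
from collections import defaultdict

# Hypothetical raw events; the field names are assumptions for illustration.
events = [
    {"post_id": "p1", "user_id": "u1", "action": "like"},
    {"post_id": "p2", "user_id": "u1", "action": "view"},
    {"post_id": "p1", "user_id": "u2", "action": "like"},
    {"post_id": "p2", "user_id": "u3", "action": "like"},
]


class ServingTable:
    """In-memory stand-in for a NoSQL table keyed by post_id."""

    def __init__(self):
        self._rows = {}

    def upsert(self, key, value):
        # Insert the row if it is new, overwrite it otherwise.
        self._rows[key] = value

    def get(self, key):
        return self._rows.get(key)


def precompute_likes(events, table):
    """Stream processing path: keep a running count and upsert on every event."""
    counts = defaultdict(int)  # the processor's internal state
    for event in events:
        if event["action"] == "like":
            counts[event["post_id"]] += 1
            table.upsert(event["post_id"], {"likes": counts[event["post_id"]]})


def likes_on_demand(events, post_id):
    """OLAP-style path: aggregate over the raw events at query time."""
    return sum(
        1 for e in events if e["post_id"] == post_id and e["action"] == "like"
    )


table = ServingTable()
precompute_likes(events, table)
```

In the first path the application reads a ready-made row with `table.get("p1")`; in the second it asks an arbitrary question of the raw events, which is only practical when the engine underneath is built for fast scans.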

Multiple catalysts are transforming the market

The trend towards more user-facing analytics delivered in real time was pioneered by large tech companies, which wanted to deliver better user experiences and react to change quickly. Although real-time architectures have been notoriously hard to adopt, we see a number of catalysts at play that will pave the way to mainstream adoption.

First, the current wave of solutions focuses on SQL and Python, which are familiar to data scientists, the key constituency in the development of user-facing analytics. By contrast, the original stream processing frameworks were built on top of JVM languages (Java and Scala).

Second, the transition from self-managed deployments to SaaS stream processing platforms has made it possible to adopt these technologies with limited operational pain and at a smaller scale. Previous iterations required significant resources to manage the many moving pieces needed to deliver high availability (e.g., ZooKeeper) and imposed a large minimum footprint to amortize the cost of a solution designed for a very large scale. Additionally, for organizations not willing to adopt SaaS, similar gains have been made possible with the use of Kubernetes.

Last (but not least), the introduction of tiered storage across event streaming platforms has decoupled storage and compute, making effectively unlimited retention practical and eliminating bottlenecks in data retrieval. In the past, companies would have had to run a batch system (e.g., Spark) for backfilling alongside the real-time layer. Operating two systems and maintaining two codebases (the lambda architecture) was prohibitively complex and expensive. With tiered storage, it’s possible to fully eliminate the batch layer and rely exclusively on a real-time stream processing framework.
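The sketch below illustrates why long retention removes the need for a second codebase. It is a toy model under our own assumptions: `EventLog` is a hypothetical in-memory stand-in for a retained event stream, and the event fields are invented. The point is that backfilling is just consuming from offset 0 with the same logic that handles live events.

```python
class EventLog:
    """In-memory stand-in for an event stream that retains its full history."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def read_from(self, offset):
        # Return a copy of every retained event at or after `offset`.
        return self._events[offset:]


def apply(state, event):
    """The single piece of business logic: running spend per user."""
    user = event["user_id"]
    state[user] = state.get(user, 0) + event["amount"]


def consume(log, state, offset):
    """Apply all events from `offset` onward and return the next offset."""
    batch = log.read_from(offset)
    for event in batch:
        apply(state, event)
    return offset + len(batch)


log = EventLog()
for user_id, amount in [("u1", 10), ("u2", 5), ("u1", 20)]:
    log.append({"user_id": user_id, "amount": amount})

state = {}
next_offset = consume(log, state, 0)  # backfill: replay the retained history

log.append({"user_id": "u1", "amount": 5})
next_offset = consume(log, state, next_offset)  # live: same code path
```

A lambda architecture would need a batch job to compute the history and a separate streaming job for new events; here both phases call `consume`, differing only in the starting offset.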

Mapping the market

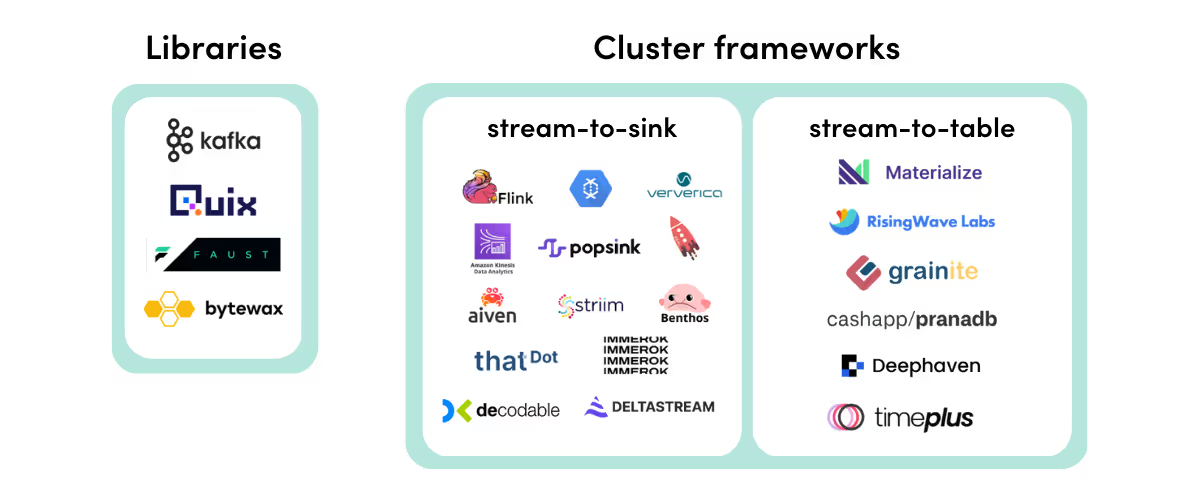

Developers and data scientists who want to adopt stream processing can follow different approaches depending on their requirements.

The most important decision is how the application runs:

Libraries simplify the development of stream processing applications by providing higher-level abstractions on top of the low-level produce/consume APIs exposed by event streaming platforms. In particular, libraries incorporate stateful operators that enable applications to produce aggregates. Under this model, developers embed the library into an application that they deploy and scale themselves.
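As a rough illustration of what a stateful operator does, the sketch below counts events per key in fixed (tumbling) time windows. It is written from scratch in plain Python and does not reflect the API of any particular library; the class name, the `(timestamp, key)` event shape and the 60-second window are all assumptions.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Counts events per key within fixed, non-overlapping time windows."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        # State that survives between events: (window_start, key) -> count.
        self.state = defaultdict(int)

    def process(self, timestamp, key):
        # Align the timestamp to the start of the window that contains it.
        window_start = timestamp - (timestamp % self.window_seconds)
        self.state[(window_start, key)] += 1
        # Emit the updated aggregate for downstream consumers.
        return (window_start, key, self.state[(window_start, key)])


counter = TumblingWindowCounter(window_seconds=60)
stream = [(0, "p1"), (30, "p1"), (59, "p2"), (60, "p1"), (125, "p2")]
updates = [counter.process(ts, key) for ts, key in stream]
```

The operator is "stateful" because each output depends on earlier events, not just the current one. A real library would also have to persist this state and expire old windows, which the sketch leaves out.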

Cluster frameworks provide a fixed infrastructure that takes responsibility for persisting state and scheduling applications that execute as long-running jobs. As a result, the operator managing the cluster is responsible for ensuring that it meets the demands of the application.

Cluster frameworks themselves tend to fall into two categories:

- Stream-to-sink frameworks do not persist data within the cluster itself (other than internal state). In this model, output is piped back into the event stream or, in some cases, a different sink (e.g., blob storage, data warehouse). An independent serving layer (e.g., NoSQL database) is required.

- Stream-to-table frameworks also create tables (or materialized views) that can be queried externally. Depending on the implementation, tables might be stored only in memory or persisted to disk. This doesn’t preclude the developer from also outputting to a sink and/or optionally adopting a standalone serving layer.
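The two categories can be contrasted with a small sketch. Everything here is hypothetical and simplified: a Python list stands in for the sink, a dictionary for the materialized view, and the class and function names are ours rather than those of any framework.

```python
class RunningCount:
    """Stateful operator shared by both styles: counts events per key."""

    def __init__(self):
        self._counts = {}

    def update(self, key):
        self._counts[key] = self._counts.get(key, 0) + 1
        return self._counts[key]


def run_stream_to_sink(keys, sink):
    """Stream-to-sink: emit each updated count; the job itself is not queryable."""
    operator = RunningCount()
    for key in keys:
        sink.append((key, operator.update(key)))


class StreamToTableJob:
    """Stream-to-table: maintain a view that callers can query directly."""

    def __init__(self):
        self._operator = RunningCount()
        self._view = {}  # stand-in for a materialized view

    def process(self, key):
        self._view[key] = self._operator.update(key)

    def query(self, key):
        return self._view.get(key, 0)


keys = ["p1", "p2", "p1"]

sink = []  # stand-in for an output stream or external store
run_stream_to_sink(keys, sink)

job = StreamToTableJob()
for key in keys:
    job.process(key)
```

In the first style, something downstream still has to turn the emitted updates in `sink` into a queryable store. In the second, `job.query("p1")` answers directly from the view the job maintains.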

Despite these rather clear-cut definitions, the truth is that many stream processing solutions do not fall strictly under one definition, and within each category there are major differences between implementations and providers. This “Cambrian explosion” of stream processing architectures suggests that many designs are being evaluated against different customer requirements, which will lead to exciting progress in the space.

For the purposes of this blog post, we have categorized existing products and vendors as follows:

The future of stream processing

There are two key reasons for founders and investors to be excited about the stream processing space:

The first is that event streaming platforms like Kafka solve stock (storage) and flow (network) problems, whereas stream processing provides analytical capabilities (compute). Because the resources spent on compute tend to be larger, we think that stream processing has the potential to be an even bigger market.

The second reason is that event streaming platforms expose standard produce/consume APIs, which enables stream processors to be developed independently. This decoupling means that it is possible to build a stream processing system that works with the various event streaming platforms. As a result, stream processing systems can be developed as standalone products, leaving room for new entrants and innovation.

We’re really excited about how the next generation of stream processing providers is unlocking access to real-time user analytics. If you’re a builder in this space and like our thesis, we’d love to hear from you!
