This article on Generative AI was co-authored by Jeremy Kaufmann and Eric Anderson with contributions from Max Abram and Maggie Basta.

Imagine it’s 2002 and you are an early engineer at Salesforce. There is no Dreamboat offering free hotel rooms, no Dreamfest featuring famous musicians, and not even a single Dreamforce cocktail party to add to your social calendar (gasp!). Instead, you spend the majority of your time building and maintaining data centers that span the globe, finagling hand-crafted orchestration layers to interact with the various data centers, and in the few remaining hours of the day, designing a complex SaaS product that sits on top of this messy stack. Hard to fathom, right?

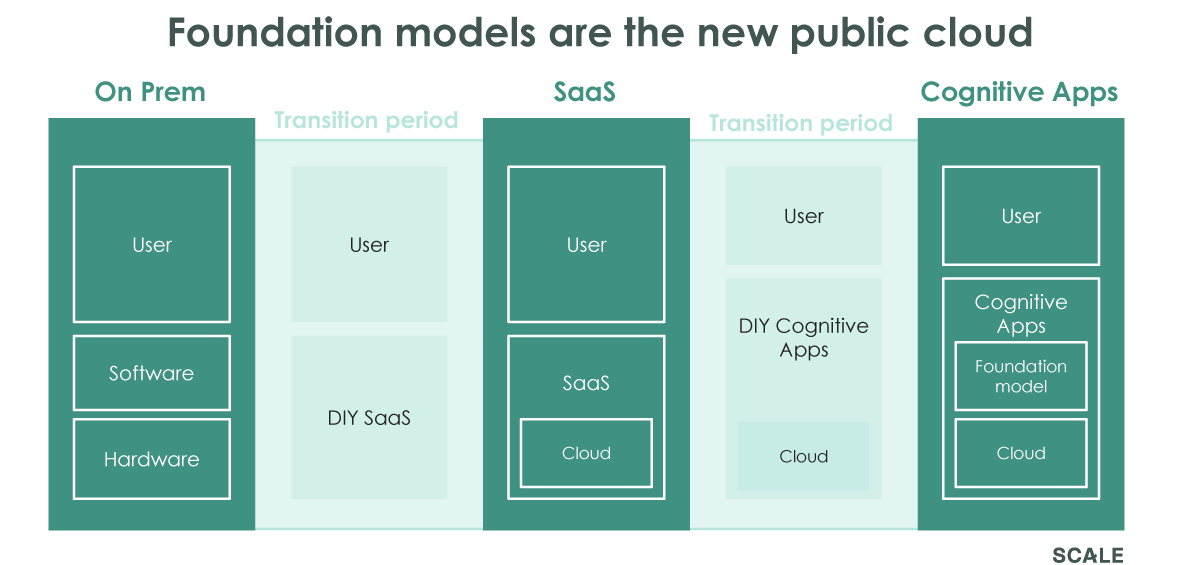

While it’s almost trite to dredge up the historical evolution from on-prem to SaaS at this point, many of us have forgotten that there was in fact a short-lived interstitial era of “roll-your-own SaaS.” The transition from on-prem to cloud-based SaaS didn’t happen overnight. Seven years passed between the founding of Salesforce (1999) and the launch of Amazon Web Services (2006), during which startups building SaaS-like experiences had to do it all on their own, without any help from the public cloud vendors. That explains why being a Salesforce engineer in 2002 was way less glamorous.

So what does this bygone era of roll-your-own SaaS reveal about the world today? Replace “SaaS” with “Machine Learning,” and we’d argue we are broadly witnessing that very same evolution now.

Until recently, building an AI startup required “do-it-yourself AI,” which consisted of gathering training data, labeling it, architecting complex data transformations, tuning hyperparameters, and selecting the right model. It was a herculean task, similar in complexity to the workload of the Salesforce engineer above. But in the last year or two, foundation models have emerged as a time-saving shortcut that enables entrepreneurs to do more, faster. These foundation models aren’t specific to particular AI use cases, but are largely general and have something to offer almost anyone. Entrepreneurs can now decouple parts of the training data and model (which come pre-packaged in a foundation model) from the application layer, which we at Scale call a cognitive application.

Looking Backwards: What We Got Right and What We Got Wrong

Two years ago, Scale’s Andy Vitus first introduced our Cognitive Applications thesis which detailed how machine learning would change the way we both build and use software. Reading that piece now, we’re struck by how strongly we continue to believe that adding intelligence to software represents the dominant paradigm in enterprise investing over the next decade. And also by just how understated the tone of the original piece seems in retrospect. It did not envision a future where ordinary people would generate art with textual prompts, or where natural language would begin to function as a credible UI for interacting with software.

So what exactly did our prior thesis leave out? For one, it missed this transition from the era of “do-it-yourself AI” to foundation models, much like a Salesforce engineer in 2002 would have never imagined how much of her job would be outsourced to the public cloud vendors by 2010. But it also missed that the fundamental use cases for machine learning would vastly expand beyond understanding the world to generating the world.

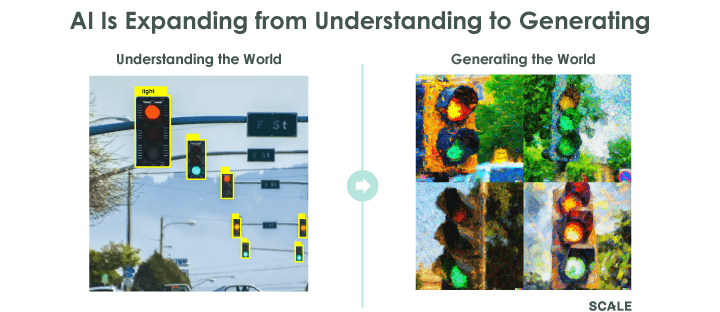

The Transition From the Era of Understanding to the Era of Generation

It’s been truly amazing to watch over the last year just how rapidly machine learning has expanded beyond “the era of understanding” into a new “era of generation.” Over the last several years, ML models largely performed tasks of understanding (classification, entity extraction, object recognition, etc.) across disciplines like speech, language, and images. Compare that to the emerging generative AI applications where computers actually create novel text and images.

In the era of understanding, there was basically one mode of operation. We called it inference, because it was about converging on a conclusion, usually in the form of applying a label. It was basically all “Hot Dog or Not Hot Dog.”

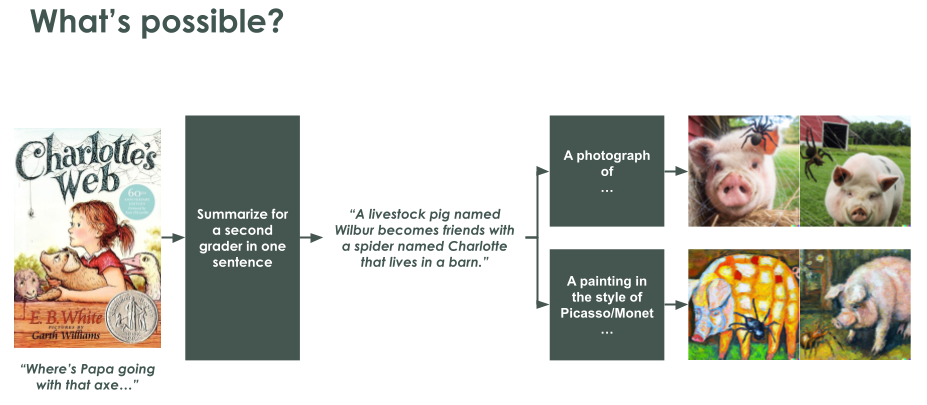

In the era of generation, the mode of operation is divergent. Pretty much any medium can be transformed into any other in a variety of ways. And the same inputs can produce an infinite number of equally valid variant outputs. Text can be generated, summarized, or translated into other text. It can cross formats and be spoken audibly or visualized as an image or video. The reverse is also true: audio can be transcribed, videos can be captioned, and imagery can create more imagery. All these can be combined into compound transformations. Never has the phrase “the possibilities are endless” felt so apt.
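The contrast between the two modes can be sketched in a few lines of Python. This is a toy illustration rather than how any production model works: the scores are invented, `classify` stands in for convergent inference (pick the single best label), and `sample` stands in for divergent generation (draw from a distribution, so repeated calls can differ).

```python
import math
import random

def classify(scores):
    """Era of understanding: converge on the single highest-scoring label."""
    return max(scores, key=scores.get)

def sample(scores, temperature, rng):
    """Era of generation: draw one option from a softmax over the scores."""
    options = list(scores)
    weights = [math.exp(scores[option] / temperature) for option in options]
    return rng.choices(options, weights=weights, k=1)[0]

# Hypothetical scores, invented purely for illustration.
label_scores = {"hot dog": 2.1, "not hot dog": 0.3}
next_word_scores = {"pig": 1.2, "spider": 1.1, "barn": 0.9, "web": 0.8}

rng = random.Random(0)
print(classify(label_scores))  # always "hot dog" for these scores
print([sample(next_word_scores, 1.0, rng) for _ in range(5)])  # can vary per draw
```

Every sampled item is a valid output, which is the sense in which the same input supports many equally acceptable variants.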

Caption: Alternative book cover art created by generating images from a generated summary of the book’s text. The same process could be applied to any book. Credit to GPT-3, DALL-E 2 and our very own Maggie Basta (as well as E.B. White?)

Foundation Models Transform How AI Entrepreneurs Build Companies

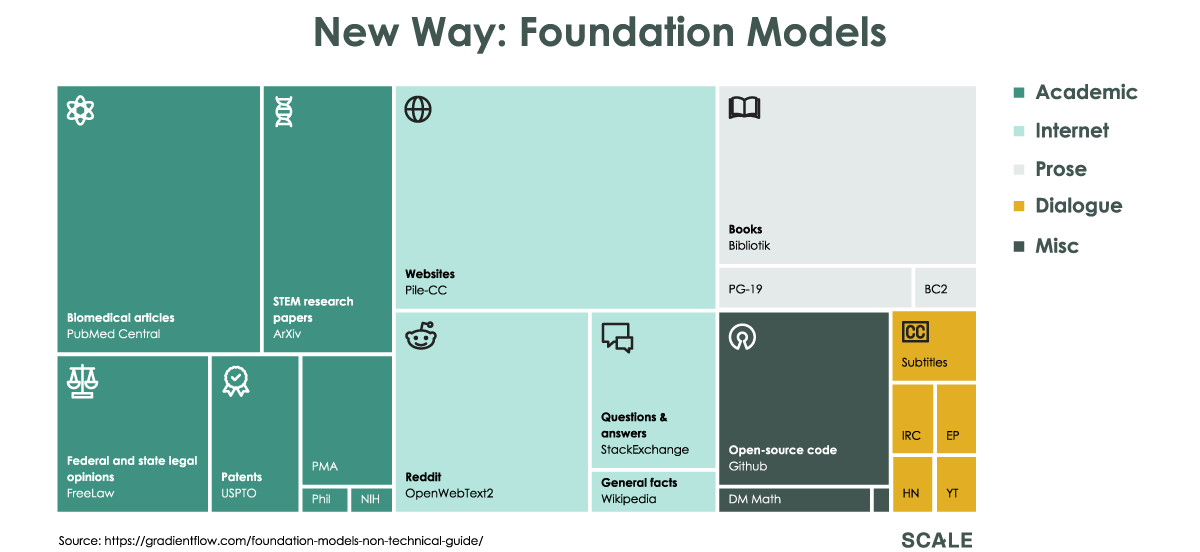

For AI entrepreneurs, the era of understanding was characterized by “do-it-yourself AI.” Until very recently, the individual startup was responsible for gathering training data (laborious), carefully hand labeling the data (expensive), and then building their own model (requires ML engineers). There was no way to harness the magic of AI without this effort. This is why AI investors historically focused heavily on both the quality of the underlying training data (see “Data is Not the New Oil” by Zetta VP and “The Empty Promise of Data Moats” by A16Z) and the raw talent of the machine learning engineers.

Foundation models offer a new way of adding AI to an application and expand what it means to be an AI entrepreneur. Models like GPT-3, DALL-E 2, and Stable Diffusion have been pre-trained on a massive corpus of data and can be used as a platform for an enormous breadth of AI-powered products. Yes, entrepreneurs may still need to fine-tune these general models on their particular domains. But instead of needing hundreds of thousands of specialized documents and deep AI expertise, they may need only a thousand such documents. Every AI entrepreneur now holds a “golden ticket” to the world’s data in their back pocket.

The market has already proven out the power of foundation models for entrepreneurs: one of the largest and fastest growing startups of the generative AI era, Jasper AI, exploded to significant revenue before even hiring a full ML team.

The real significance of foundation models is that they can encapsulate all accessible human knowledge and do so in a new way. Google’s search engine accomplishes its mission to “organize the world’s information and make it universally accessible and useful” by viewing everything possible and saving important bits and pointers to the source. The result is “well over 100M GBs in size.” While these foundation models also ingest everything they can (Stable Diffusion looked at 600M or so images), they don’t save copies of what they ingest. Instead, they maintain a single representation that is influenced by each image. Where Google’s index is estimated to be 100M GBs in size, Stable Diffusion is just 4 GB and can fit on a DVD (I know we just dated ourselves).
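To make the scale of that compression concrete, here is a back-of-the-envelope calculation using only the approximate figures quoted above. The one added assumption is that a gigabyte means 10^9 bytes.

```python
# Approximate figures taken from the paragraph above.
GOOGLE_INDEX_GB = 100_000_000   # "well over 100M GBs"
MODEL_GB = 4                    # size of the Stable Diffusion model
TRAINING_IMAGES = 600_000_000   # "600M or so images"

BYTES_PER_GB = 10**9  # assumption: decimal gigabytes

size_ratio = GOOGLE_INDEX_GB / MODEL_GB
bytes_per_image = MODEL_GB * BYTES_PER_GB / TRAINING_IMAGES

print(f"The index is roughly {size_ratio:,.0f}x larger than the model")
# -> The index is roughly 25,000,000x larger than the model
print(f"About {bytes_per_image:.1f} bytes of model per training image")
# -> About 6.7 bytes of model per training image
```

At under seven bytes per image, the model cannot be storing copies of its training data, which is consistent with the "single representation" framing.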

Where is the Money in the Era of Generation?

One of the biggest unanswered questions in this era of generation is who will ultimately extract the lion’s share of the value created by these generative processes.

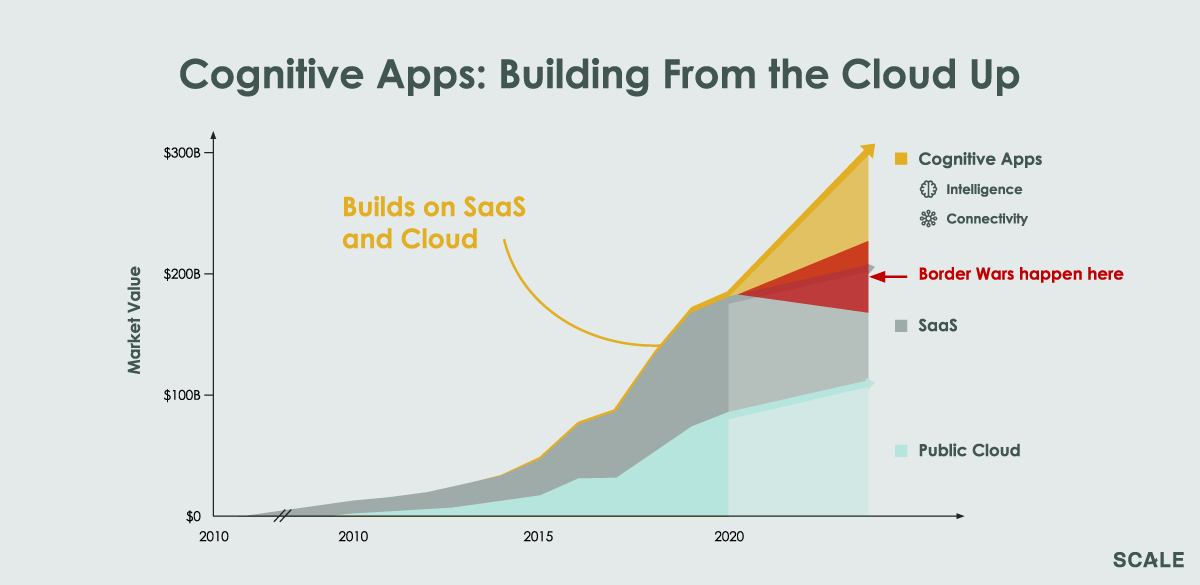

In the previous era of understanding, we often saw a fierce “border war” between the existing system of record (e.g. Salesforce or ServiceNow) and the newly built cognitive applications that sat on top of it and attempted to wedge their way into the stack by automating various processes (think of customer support chatbots like Solvvy and Ada Support, or ITSM automation companies like Moveworks sitting on top of ServiceNow).

The analogous situation in this era of generation is the “border war” between the companies that have built the foundation models themselves (the infrastructure layer), like Cohere, OpenAI, and Stability AI, and the crop of startups, like Jasper AI, Runway ML, and Regie AI, that sit on top of these complex models and integrate into various business processes. After all, the companies that have invested millions of dollars in building these incredible foundation models need to figure out how they can best monetize their creation, while the Runway MLs of the world start off with the advantage of being closer to the end user. Grab some popcorn and get ready for the inevitable border wars.

Strategies for Startups Building In the Era of Foundation Models

So what strategies are available to entrepreneurs to capture the tremendous value created by these generative apps? Below we outline several approaches we’ve seen to date, recognizing that this list is by no means comprehensive and that entrepreneurs fighting in the trenches of the generative AI border wars will likely make use of multiple strategies. In fact, these blueprints are listed in descending order through the new cognitive apps stack: starting with the application UI, then at the intersection of the application and model, and lastly at the level of the foundation model itself.

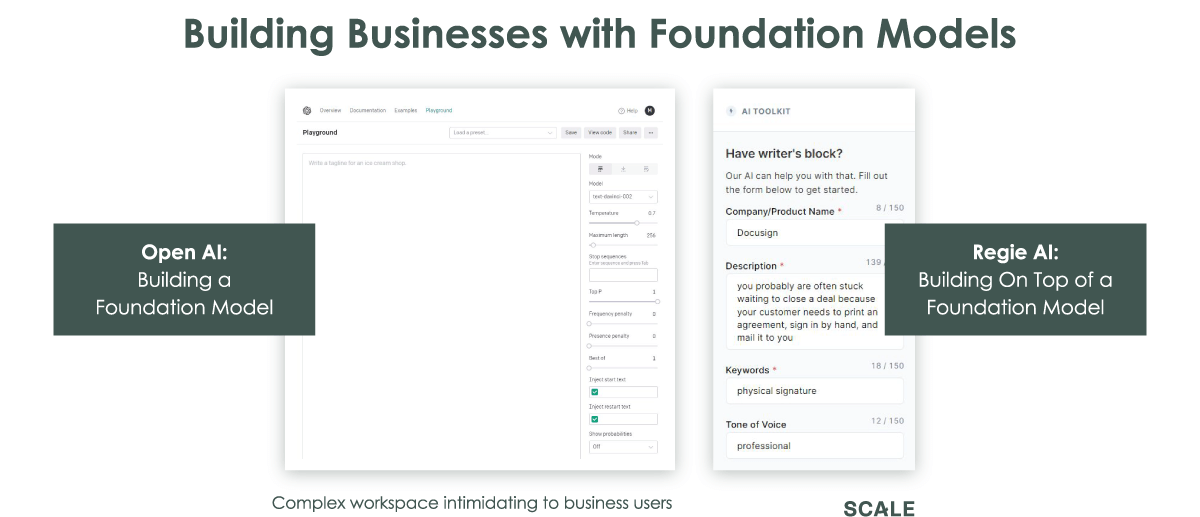

1. Building a more accessible UI

A more accessible UI allows business users to interact with the underlying model. For example, companies like Regie AI and Jasper AI recognized that sales professionals and marketers looking to generate sales cadence emails and blog posts would likely find it too complex to interact with the interface of GPT-3, which is built for a developer. These companies help business users interact with generative models in a more structured and guided way, eliminating the need for a non-technical user to make model tuning decisions around “temperature,” “frequency penalty,” and “presence penalty,” all choices that show up in the OpenAI interface.
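One way an application might hide those tuning decisions is to map a plain-language choice onto a fixed set of sampling parameters. The sketch below only builds a request dictionary and does not call any API. The preset names and values are hypothetical and untuned, and we make no claim that this is how Regie AI or Jasper AI work internally.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SamplingPreset:
    temperature: float
    frequency_penalty: float
    presence_penalty: float

# Hypothetical presets; the values are illustrative, not tuned.
PRESETS = {
    "professional": SamplingPreset(temperature=0.3, frequency_penalty=0.2, presence_penalty=0.0),
    "creative": SamplingPreset(temperature=0.9, frequency_penalty=0.5, presence_penalty=0.6),
}

def build_request(prompt: str, tone: str = "professional") -> dict:
    """Translate a user-facing tone choice into model sampling parameters."""
    preset = PRESETS[tone]
    return {
        "prompt": prompt,
        "temperature": preset.temperature,
        "frequency_penalty": preset.frequency_penalty,
        "presence_penalty": preset.presence_penalty,
    }

request = build_request("Write a follow-up email after a product demo.", tone="creative")
print(request)
```

The business user picks "professional" or "creative" from a menu and never sees the three numbers behind it.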

In fact, one of the most powerful strategies seems to be taking business users out of the complex world of prompt design and prompt engineering, helping ordinary people structure their inputs in a way that is most likely to lead to a good model output. The magazine Cosmopolitan describes the hours they spent trying to engineer a DALL-E prompt that would produce an up-to-snuff magazine cover. The winning prompt, “a strong female president astronaut warrior walking on the planet Mars, digital art synthwave,” is not exactly the kind of thing a novice could generate.

At the end of the day, prosumers and professionals interested in using foundation models for their real-world applications are unlikely to be interested in learning to engineer prompts or tune a model. Broad adoption will require applications that productize best practices and fit nicely into existing workflows.
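Productizing prompt best practices can be as simple as collecting a few form fields and assembling the prompt on the user's behalf. The following is a minimal sketch: the template wording, field names, and the product in the usage example are all invented for illustration.

```python
PROMPT_TEMPLATE = (
    "Write a {length} {content_type} for {audience}.\n"
    "Product: {product}\n"
    "Key points:\n"
    "{points}\n"
    "Tone: {tone}"
)

def build_prompt(content_type, audience, product, points, tone="friendly", length="short"):
    """Turn form fields into one consistently structured prompt string."""
    cleaned = [p.strip() for p in points if p.strip()]
    if not cleaned:
        raise ValueError("at least one key point is required")
    return PROMPT_TEMPLATE.format(
        length=length,
        content_type=content_type,
        audience=audience,
        product=product,
        points="\n".join(f"- {p}" for p in cleaned),
        tone=tone,
    )

prompt = build_prompt(
    content_type="blog post",
    audience="marketing managers",
    product="Acme Scheduler (a made-up product)",
    points=["Cuts meeting setup time", "  Integrates with existing calendars "],
)
print(prompt)
```

The user fills in a form; the application owns the prompt structure and can improve it centrally as best practices evolve.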

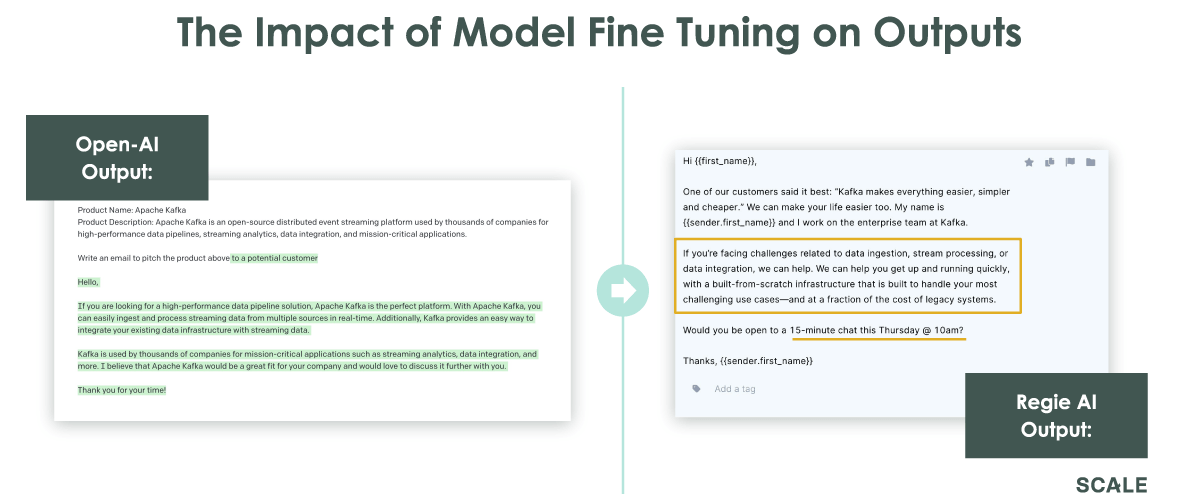

2. Fine Tuning a Generative Model on a Particular Dataset

OpenAI first began allowing developers to create GPT-3 models tailored to the specific content in their apps and services back in December of 2021. This process is called fine tuning: specific examples are passed to the model to adjust the billions of weights within the network, making it more performant for the domain in question. Fine tuning simply enables the entrepreneur to build a more accurate domain-specific product.

For example, Regie AI built a fine-tuned model trained specifically on thousands of sales emails. Fine tuning means that Regie knows the best performing tone and length to take in sales emails. Moreover, Regie’s generative tool has been exposed to the weird nuances that exist in the world of sales emails, such as whether or not to suggest a time for a meeting to a prospect, and the very human power dynamics that implies.
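In practice, much of the fine-tuning work is preparing examples in the format the model provider expects. The sketch below writes prompt/completion pairs as JSON Lines, one example per line. The field names follow a common convention for GPT-3-style fine tuning but should be treated as an assumption and checked against your provider's documentation. The separator, the end marker, and the email itself are invented.

```python
import json

SEPARATOR = "\n\n###\n\n"  # assumption: marks where the prompt ends
END_MARKER = " END"        # assumption: marks where the completion ends

# Hypothetical training examples; a real dataset would hold thousands.
raw_examples = [
    {
        "context": "Prospect: VP of Sales at a logistics firm. Goal: book an intro call.",
        "email": "Hi Dana, I noticed your team is hiring reps quickly. Open to a short call next week?",
    },
]

def to_training_record(example):
    """Convert one raw example into a prompt/completion pair."""
    return {
        "prompt": example["context"] + SEPARATOR,
        "completion": " " + example["email"] + END_MARKER,
    }

def to_jsonl(examples):
    """Serialize the records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(to_training_record(e)) + "\n" for e in examples)

jsonl_text = to_jsonl(raw_examples)
print(jsonl_text, end="")
```

A few thousand lines like this, rather than a hand-built model, is what the "thousands of sales emails" amount to from an engineering standpoint.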

One of the biggest question areas for entrepreneurs to watch going forward is the velocity of improvement in subsequent versions of foundation models. Today, fine tuning on domain-specific data is one of the key ways to properly “steer” the model and stop it from generating gibberish. The extent to which fine tuning is needed in the future will closely parallel the rate of improvement in underlying models like Stable Diffusion and GPT-3. We’re watching this pattern closely as it will determine the extent to which the locus of value moves from the vertical to the horizontal, and whether fine tuning is a credible route to defensibility in the generative AI space.

3. Building (and even open-sourcing) a model

It is easy to see how proprietary models like DALL-E and GPT-3 can make money, but what about Stability.ai, the open source darling that helped create Stable Diffusion? Stable, as we’ll call the model, was only published six weeks ago and it has rocked the developer community. It turns out there is much more demand for experimenting with foundation models than proprietary models allow. Developers are flocking to Stable and extending Stability’s influence into new areas like image compression, animation, infinite video, textual inversion, alternative web UIs, support for Mac GPUs, and support for Intel CPUs, faster than proprietary vendors can build products.

As these efforts mature into actual products, many will look to Stability to define the standard version, one they can offer as a service. As users’ apps go into production, ops teams will want a vendor to ensure model security, uptime, and support, and we bet Stability will be the first place they look.

In every heavy engineering domain, be it systems (Unix, Linux, Docker, Kubernetes) or data (Hadoop, Spark, Kafka, dbt), builders have innovated faster in open source, formed communities, and built big businesses. But don’t count out the others. If history is any indication, open source standards in big markets like this typically exist alongside a proprietary leader: Snowflake/Databricks, iOS/Android, Windows/Linux.

How Company Building Evolves With the Rise of Foundation Models

Moving beyond the fight over value capture in the generative era, it’s worth reflecting more deeply on how building and scaling cognitive applications will change as foundation models become a standard part of the entrepreneur’s toolkit.

Increase in Speed to Market: Foundation models enable entrepreneurs to hack together the MVP for an ML-enabled product more quickly and cheaply than ever before. With a supersized portion of the world’s training data sitting in the back pocket of founders, these newer companies built on top of these foundation models have a massive head start relative to all the labeling and manual configuration that marked the do-it-yourself AI era.

The Rise of the Bottoms-Up GTM Motion In ML: Historically, many cognitive applications in the era of do-it-yourself AI sold tops-down at higher price points. This was to compensate for the fact that time to value in ML has historically been longer, given the need to access vast quantities of company-specific data and then build company-specific models. In the new era, foundation-model-enabled companies sell directly to individual users at lower price points and then upsell with enterprise-wide purchases. It’s not surprising that when a larger share of the training data comes from the foundation model itself rather than from customer-specific data, time to value improves, thus enabling the classic software bottoms-up sale.

Model ≠ Product: Nat Friedman and Daniel Gross are correct to highlight all the challenges that still remain in the generative AI space, particularly the vast gap that still exists between using these models in academic research vs. actually embedding them in business workflows without causing user frustration. We agree that ultimately entrepreneurs must remember that “the model is not the product. It is an enabling technology that allows new products to be built” and that “entrepreneurs need to understand both what the models can do, and what people actually want to use.” Having access to an amazing model doesn’t give you a pass on understanding the needs of your user.

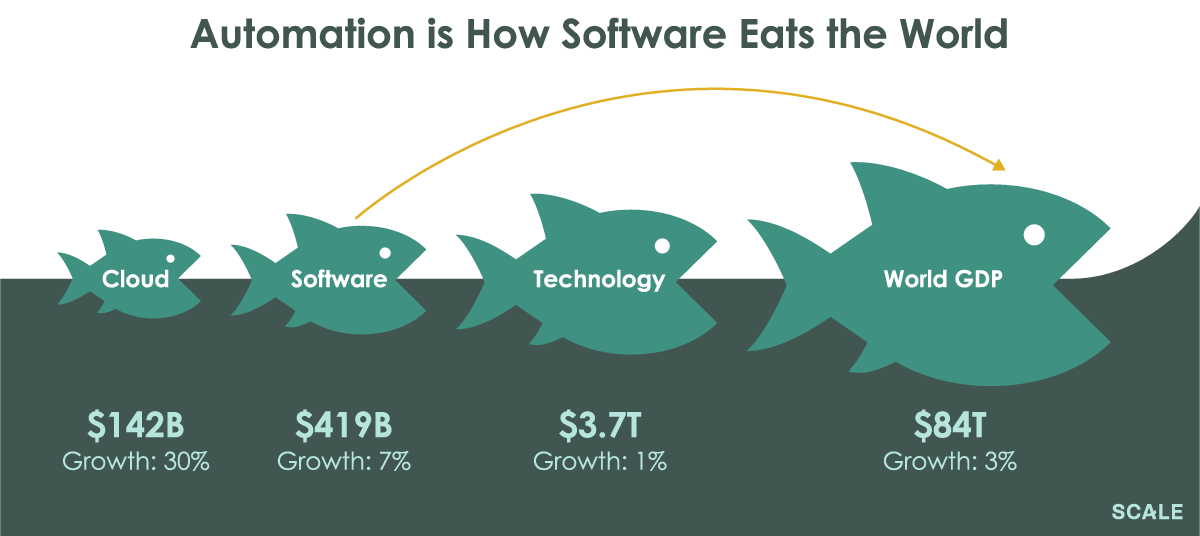

With Generative AI, Software Might Actually Eat the World

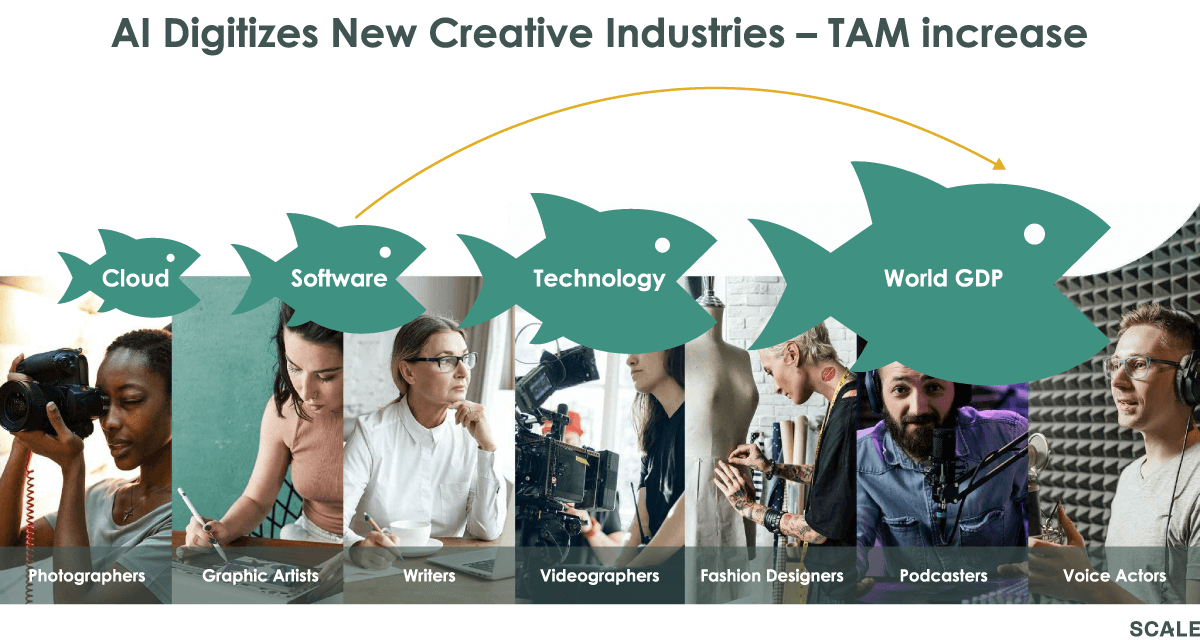

Generative AI is quickly becoming a canonical example of how automation allows software companies to enter markets where software has never gone before. More than two decades after the founding of Salesforce, cloud software has already eaten a large chunk of traditional on-prem software spend, and advances in AI are now giving software companies the ability to directly feast on previously untapped categories of spend.

Our partner Rory O’Driscoll previously described an upcoming era he termed the SaaS Hunger Games, writing that in a world of more cloud-on-cloud competition, the next generation of software companies should focus on reaching deep into the “real world” and earning dollars by automating, or eating, the work that today is outside the scope of current technology spend. Generative AI is simply one of the most successful examples of this approach we’ve seen to date.

Today, human voice actors are in direct competition with the text-to-speech capabilities of synthetic speech companies like WellSaid Labs and Resemble AI. Traditional corporate videographers are going head-to-head against synthetic video companies like Synthesia and HourOne, which produce corporate training videos at 1/60th the cost of traditional videography. With very little advance warning, AI is in the midst of digitally transforming creative industries many had incorrectly assumed were safe from the long-term trend of software eating the world.

While the societal and artistic impacts of this phenomenon are certainly worth a much longer discussion amid all the attention they are getting from the popular press (check out Kevin Roose’s piece in the New York Times and Humberto Moreira’s Crafting the Hyperreal), it is clear that the generative AI era is only in its first inning and there will be no going back.

In the midst of such exciting innovation, Scale couldn’t be more thrilled to double down on our focus on machine learning with a new $900M fund and play an active role in supporting the founders building companies on the frontier of foundation models and generative AI.

Thank you to our colleagues Max Abram and Maggie Basta for their many contributions to this piece and for their thought leadership. Max wrote an early draft of this post and was an essential editor, while Maggie is well on her way to becoming a full-fledged AI artist!

For further questions, please reach out to Jeremy Kaufmann (jeremyk@scalevp.com) and Eric Anderson (eric@scalevp.com). If you are a builder in the generative AI space, we’d love to hear from you!